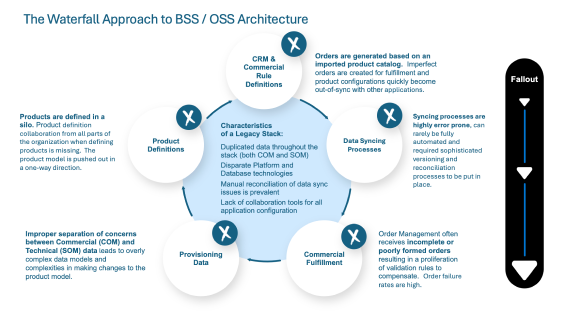

Those of us who have worked on BSS / OSS transformation projects around the world over the past several decades carry the scars of overly complex projects gone wrong: they often took years to materialize, repeatedly missed production deadlines and, once delivered, were inflexible and fraught with configuration and integration complexities. I often look back and question what benefits the transformation really brought, other than modernizing the underlying technology base and retiring some aging infrastructure. The promised, and ever elusive, goal of improved time-to-market was rarely realized.

So, how can things be different now? Which of those lessons are actually reflected in the offerings of mainstream vendors? Rest assured, both the approach to transformation projects and the available technologies have changed considerably. There truly is light at the end of the tunnel if we choose the right vendor(s) and stick to the right transformation principles.

The Shift Away from a Best-of-Breed Approach

In the not-so-distant past, enormous amounts of time and money went into evaluating vendor offerings (point solutions) to try to determine the so-called “Best-of-Breed” vendors that would participate in transformation projects. A telco, for example, would evaluate and pick the best Customer Relationship Management (CRM) software from one vendor, the best logical / physical inventory system from another, the best order management application from a third, the best billing solution from a fourth, and so on.

Once the vendor selection was complete, the real work began:

- Corralling vendors into cooperating with one another,

- Deciding which application would support which function, and encouraging each vendor to stick to its own functional turf (there was invariably some functional overlap),

- Getting end-to-end features to work across all participating applications, and

- Determining how data would be synced between them.

Each vendor invariably had its own UI/UX and underlying platform technologies, which meant service providers had multiple application licenses to coordinate and large numbers of users to train.

Recall that a significant part of the overall effort also went into implementing logic in the integration layer to iron out differences in application behavior (to cite a very simple example among many complex ones, an "Add" action in application A might correspond to a "New" action in application B). Integration logic changes were often considerable and widespread when adjusting existing offerings, and more extensive still when introducing new ones.
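As a rough illustration of the kind of logic that accumulated in the integration layer, the sketch below translates order actions from one hypothetical application's vocabulary into another's. The action codes, the mapping table and the `translate_action` / `translate_order` helpers are invented for this example and do not come from any vendor product; real mappings typically also depended on product type, order state and more.

```python
# Hypothetical action vocabularies: application A says "Add", application B says "New".
# These codes are illustrative only and are not taken from any specific vendor product.
A_TO_B_ACTIONS = {
    "Add": "New",
    "Delete": "Cease",
    "Modify": "Change",
}


def translate_action(action_a: str) -> str:
    """Translate an order-line action from application A's vocabulary into B's.

    An action with no mapping is rejected, mirroring how an unmapped code
    would surface as fallout requiring manual handling.
    """
    try:
        return A_TO_B_ACTIONS[action_a]
    except KeyError:
        raise ValueError(f"No mapping for action {action_a!r}; order would fall out") from None


def translate_order(order: dict) -> dict:
    """Return a copy of an application A order with every line translated for B."""
    return {
        **order,
        "lines": [
            {**line, "action": translate_action(line["action"])}
            for line in order["lines"]
        ],
    }


if __name__ == "__main__":
    order = {"id": "ORD-1", "lines": [{"product": "Broadband 100", "action": "Add"}]}
    print(translate_order(order))
```

Every new action, or any product-specific exception to these rules, meant another change to this layer on top of the changes to the applications on either side of it, which is one reason integration work grew with every new offering.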

One other characteristic was also prevalent: each application was driven off its own data model, which needed to be updated every time a dependent configuration changed elsewhere in the stack. Failure to do so in a timely and coordinated manner resulted in fallout (orders failing automated processing) that required manual work to resolve.

These challenges were invariably costly in time and resources and difficult for internal teams to coordinate end-to-end. Ultimately, configuration updates took weeks, or in some cases even months, to complete, with testing comprising a large part of the effort because of the fragility of the stack. Clearly, the approach to BSS / OSS architecture needed to change.

Figure 1: Waterfall Approach to BSS / OSS

Catalog-Driven Architectures

In the early 2000s, the concept of catalog-driven applications began to emerge as a more formal way to deal with disparate data sources that easily fell out of sync with one another. The premise was that if data was centralized (or mastered) in a single location, changes to that data could be pushed out to BSS and OSS systems more easily, resulting in improved time-to-market and less fallout.

The challenge was that the syncing process was highly error-prone, could rarely be fully automated, and required sophisticated versioning and reconciliation processes. Sadly, such architectures let vendors claim their products were catalog-driven while making few, if any, changes to the way their software worked: each application simply extracted the portions of catalog data relevant to it and enriched them as needed. By the time the applications were ready for testing, a new permutation of the product set was ready to be sent out, and the whole process had to be repeated until the catalog stabilized. As a result, configurations were often deployed to production containing errors that could only be caught once live orders were running through the system.

We refer to this approach to BSS / OSS architecture as the waterfall approach (see Figure 1), and it did not improve time-to-market (TTM). The industry quickly realized that more needed to be done. Validation rules duplicated throughout the stack, disparate platform and database technologies, and a lack of collaboration tools for application configuration specialists remained contributing factors to transformation failures. Each of these challenges needed to be addressed in a far more meaningful way.

As the industry began to rethink these architectures, the right questions began to emerge:

- What if the applications in the BSS layer were driven directly off a single shared master product repository and were not allowed to hold their own copy of the data model?

- What if the moment a product in the master changed it was visible to every application in the ecosystem in real time and a notification was sent to interested parties about the change?

- Could some integration logic be eliminated if all applications shared the same specification of the various master entities (products, quotes, orders)?

- What if complex features were thought of holistically, centrally designed and implemented by each application in the ecosystem according to a single behavioral standard?

- What if all applications provided the same user experience no matter the role being performed?
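The first two questions can be sketched in a few lines: applications read products directly from one shared repository instead of holding their own copies, and interested parties subscribe to change notifications. `MasterCatalog`, `Product`, `OrderManagement` and the callback signature are hypothetical names used only for illustration; a production catalog would also need persistence, versioning and a distributed messaging mechanism rather than in-process callbacks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Product:
    """Hypothetical master product specification shared by every application."""
    code: str
    name: str
    monthly_price: float


# A subscriber is called with the product that changed.
Listener = Callable[[Product], None]


class MasterCatalog:
    """Single shared product repository (a sketch, not a vendor API).

    Applications query this object directly instead of importing their own
    copy, so every read returns the current master version.
    """

    def __init__(self) -> None:
        self._products: Dict[str, Product] = {}
        self._listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        """Register an interested party to be notified of every product change."""
        self._listeners.append(listener)

    def publish(self, product: Product) -> None:
        """Create or update a product in the master, then notify all subscribers."""
        self._products[product.code] = product
        for listener in self._listeners:
            listener(product)

    def get(self, code: str) -> Product:
        """Read the current master version of a product."""
        return self._products[code]


class OrderManagement:
    """Hypothetical BSS application holding a reference to the master, not a copy."""

    def __init__(self, catalog: MasterCatalog) -> None:
        self.catalog = catalog
        self.notifications: List[str] = []
        catalog.subscribe(self.on_product_changed)

    def on_product_changed(self, product: Product) -> None:
        # React to the change (e.g. refresh a view); there is no local copy to update.
        self.notifications.append(product.code)

    def price_for(self, code: str) -> float:
        # No local data model to keep in sync: always read from the master.
        return self.catalog.get(code).monthly_price


if __name__ == "__main__":
    catalog = MasterCatalog()
    oms = OrderManagement(catalog)
    catalog.publish(Product("BB100", "Broadband 100", 40.0))
    catalog.publish(Product("BB100", "Broadband 100", 35.0))  # price change
    print(oms.price_for("BB100"), oms.notifications)
```

Because the application never stores its own copy, there is nothing to reconcile when the master changes; the notification exists only so an application can react, for example by revalidating open quotes.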

Implementing this vision meant leaving the traditional patchwork of best-of-breed applications behind in favor of a completely new approach: one in which an exclusive master data model directly drives application behavior, and the focus shifts from individual application features to ecosystem features.